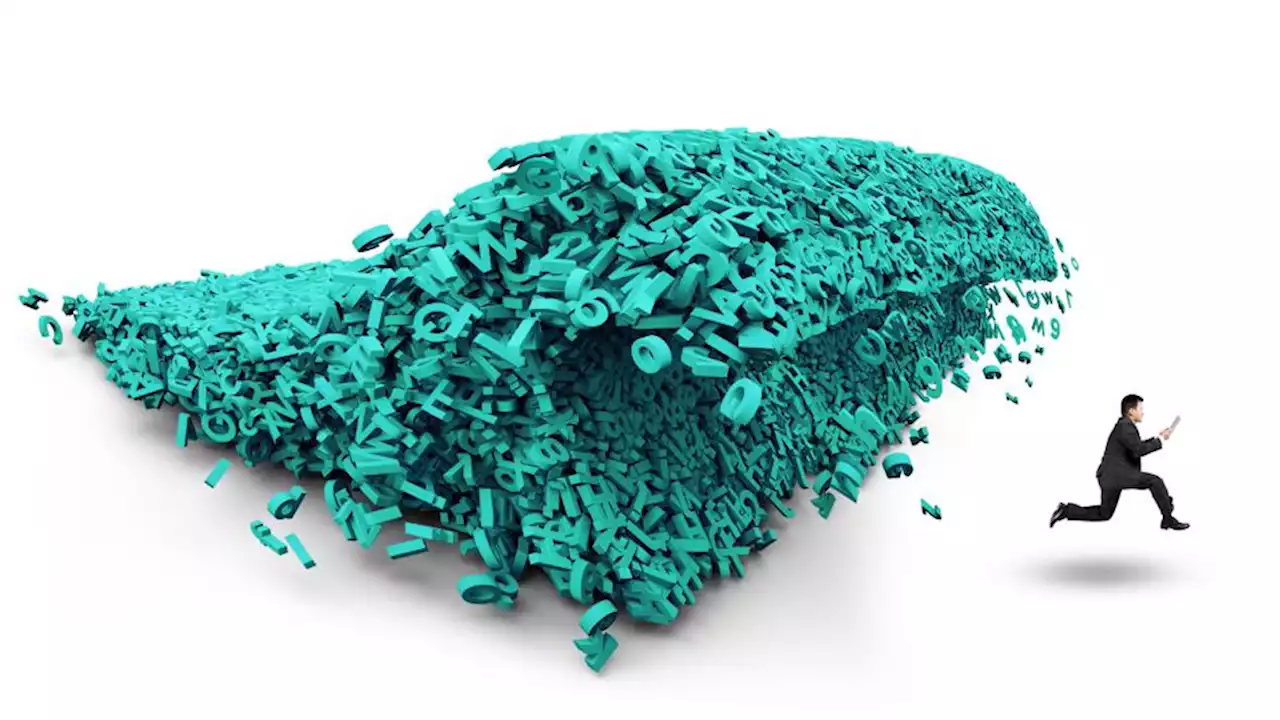

A data-centric approach to help manage the data tsunami.

But who controls the multitude of copies? How do you ascertain that the data in the copy you are using is valid at that point in time? How do you make sure the copy is not a fake? And what about the constantly increasing cost of storing multiple copies of the same data and of “data integration,” the process of making a copy of the data available for a specific application?

“Today, data is more distributed than ever in multicloud, intercloud and hybrid architectures. The need for skills and technologies to manage this complexity and financial governance across the cloud infrastructure is creating opportunities for innovation.” That concept fit well with Cinchy’s focus on eliminating data integrations and the myriad copies they produce. In 2019, however, “data mesh” was introduced as the solution to the challenges of distributed data, “distracting the market” and slowing “adoption for the data fabric,” according to Gartner.

The decision to create a new market category comes at the point in the life of a startup when market confusion slows adoption. Or when a new approach—in this case, to data management—needs a larger vision and a label that will brand it as the new new thing, the next evolutionary leap. For example, “the data lakehouse focuses on analytics use cases. It cannot serve as the backend for an operational application in the way dataware does.” And “A traditional data fabric will always require applications to have their own databases that connect to the network, however, while dataware allows developers to build new applications without dedicated operational databases.”