The Stanford researchers have also released the tools needed for people to train their own AI.

Investments to the tune of billions of dollars suggest that training AI models is hard. But researchers at Stanford seem to have done it on a modest budget, one that could allow AI companies to be spun out of garages. A critical component of this achievement was LLaMA 7B, a language model that the researchers got access to. Interestingly, this model comes from Meta, Mark Zuckerberg's company, and is one of the smallest and least expensive language models available today.

Trained on a trillion tokens, the language model has some of the capabilities that large language models are equipped with, but it is nowhere near the level we have seen with ChatGPT. The researchers then turned to GPT, the AI behind the chatbot, and used its application programming interface (API) to take 175 human-written instruction/output pairs and generate more in the same style and format. Generating 20 such pairs at a time, the researchers amassed 52,000 sample conversations in very little time, at a cost of about $500.
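To make the batching arithmetic concrete, here is a minimal Python sketch of the bootstrapping loop described above. It is a simplified illustration, not the Stanford team's released code: `generate_batch` is a hypothetical stand-in for the real API call (it returns placeholder pairs so the script runs offline), and the choice of three in-context examples per request (`shots=3`) is an assumption. Only the figures 175, 20, 52,000 and $500 come from the article.

```python
import random

SEED_COUNT = 175   # human-written instruction/output pairs (from the article)
BATCH_SIZE = 20    # new pairs requested per model call (from the article)
TARGET = 52_000    # size of the final dataset (from the article)


def generate_batch(examples, n, call_id):
    """Hypothetical stand-in for a language-model API call.

    A real pipeline would place `examples` in a prompt and ask the model
    for `n` new instruction/output pairs in the same style. This stub just
    returns unique placeholder pairs so the loop can run without a network.
    """
    return [
        {"instruction": f"instruction {call_id}-{i}", "output": f"output {call_id}-{i}"}
        for i in range(n)
    ]


def build_dataset(seeds, target=TARGET, batch_size=BATCH_SIZE, shots=3, rng=None):
    """Grow a dataset from a small seed set by repeatedly requesting batches."""
    rng = rng or random.Random(0)
    generated = []
    seen = set()  # skip exact duplicate instructions
    calls = 0
    while len(generated) < target:
        # Show the model a few randomly chosen seed pairs as examples.
        examples = rng.sample(seeds, shots)
        for pair in generate_batch(examples, batch_size, calls):
            if pair["instruction"] not in seen and len(generated) < target:
                seen.add(pair["instruction"])
                generated.append(pair)
        calls += 1
    return generated, calls


seeds = [{"instruction": f"seed {i}", "output": f"answer {i}"} for i in range(SEED_COUNT)]
data, calls = build_dataset(seeds)
print(len(data), calls)  # 52000 2600
print(f"~${500 / calls:.2f} per call if the total was $500")
```

Requesting many pairs per call is what keeps the request count, and the bill, small: at 20 pairs per call, 52,000 pairs take 2,600 calls, or roughly 19 cents per call on a $500 budget.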

After LLaMA 7B was fine-tuned on this data, the resulting model, dubbed Alpaca, was tested against OpenAI's model itself in various domains and beat GPT at its own game. The researchers go on to state that their process wasn't really optimized and that they could have gotten better results had they used GPT-4, the latest version of the AI. The researchers have now released the 52,000 questions used in the research, alongside the code used to generate them, allowing others to repeat the process and replicate the results.

But what if someone does not really care what the chatbot says, or about whom, and wants it to work without filters? OpenAI's terms of use prevent users from building competing AI, and access to LLaMA is available only to researchers. But beyond that, there is hardly anything that could prevent someone from developing their own pet AI.

Perhaps this is where regulation comes in. AI is advancing very fast, and lawmakers will need to catch up soon, or else.

Malaysia Latest News, Malaysia Headlines

Similar News:You can also read news stories similar to this one that we have collected from other news sources.

Stanford Scientists Pretty Much Cloned OpenAI's GPT for a Measly $600With a silly name and an even sillier startup cost, Stanford's Alpaca ChatGPT clone costs only $600 and shows how easy OpenAI's may be to replicate.

Stanford Scientists Pretty Much Cloned OpenAI's GPT for a Measly $600With a silly name and an even sillier startup cost, Stanford's Alpaca ChatGPT clone costs only $600 and shows how easy OpenAI's may be to replicate.

Read more »

Nvidia DGX Cloud: train your own ChatGPT in a web browser for $37K a monthMicrosoft, Google, and Oracle are all on board.

Nvidia DGX Cloud: train your own ChatGPT in a web browser for $37K a monthMicrosoft, Google, and Oracle are all on board.

Read more »

DefiLlama resolves internal strife, sends LLAMA token plans alpaca'nDefiLlama has put its internal conflict to rest, adding that there is no LLAMA token currently planned.

DefiLlama resolves internal strife, sends LLAMA token plans alpaca'nDefiLlama has put its internal conflict to rest, adding that there is no LLAMA token currently planned.

Read more »

China: ChatGPT success sees gaming giants swarm to invest in AIChinese gaming companies are swarming to invest in artificial intelligence-generated content (AIGC) after seeing the success of OpenAI's ChatGPT.

China: ChatGPT success sees gaming giants swarm to invest in AIChinese gaming companies are swarming to invest in artificial intelligence-generated content (AIGC) after seeing the success of OpenAI's ChatGPT.

Read more »

Rise of AI-tech like ChatGPT puts prompt engineers in the limelightThe surge of available AI tools has seen the introduction of a growing field called prompt engineering. What is this new expertise?

Rise of AI-tech like ChatGPT puts prompt engineers in the limelightThe surge of available AI tools has seen the introduction of a growing field called prompt engineering. What is this new expertise?

Read more »

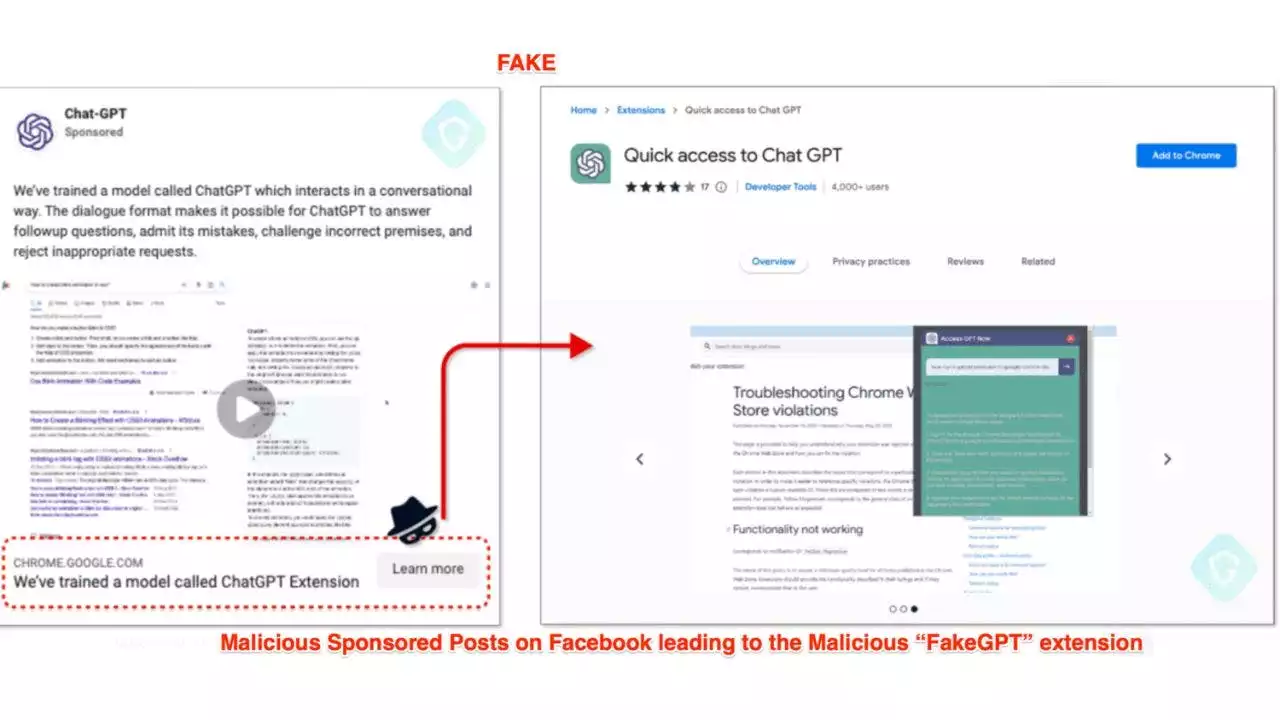

Beware of the fake ChatGPT plugin that's stealing your Facebook loginHackers are using ChatGPT's popularity to create malware targeting your information. Kurt 'CyberGuy' Knutsson explains how they do it and what you can do to be safe.

Beware of the fake ChatGPT plugin that's stealing your Facebook loginHackers are using ChatGPT's popularity to create malware targeting your information. Kurt 'CyberGuy' Knutsson explains how they do it and what you can do to be safe.

Read more »